Project plan

-

Optimisation for machine learning

Workpackage 1 - Performance analysis of optimisation methods for machine learning

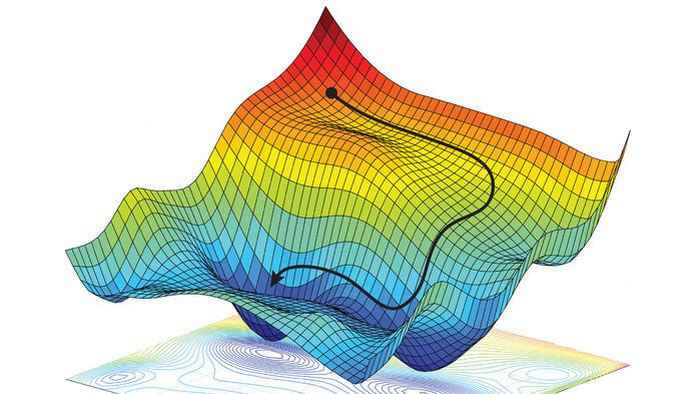

The optimisation model used to train a machine learning model has mathematical properties, such as non-convexity, that could make it hard to solve. However, in practice it can often be solved efficiently with rather simple optimisation algorithms. This is an enigmatic feature of machine learning: it often works, but it is not well understood why it works. The goal of this workpackage is to understand the success of these simple optimisation methods, and to analyse the limits of the training problem's computational tractability.
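As a toy illustration of this phenomenon (our own example, not a model studied in this workpackage), consider fitting the product of two scalar parameters u and v to the target 1, i.e. minimising the loss (uv - 1)^2 / 2. This loss is non-convex: the global minimisers (1, 1) and (-1, -1) both have loss 0, while their midpoint (0, 0), a saddle point, has loss 1/2. Nevertheless, plain gradient descent started away from the origin reaches a global minimiser. The sketch below assumes a fixed step size of 0.1 and a fixed starting point; the function names `loss` and `gradient_descent` are hypothetical.

```python
def loss(u: float, v: float) -> float:
    """Toy non-convex 'training' loss: fit the product u*v to the target 1."""
    return 0.5 * (u * v - 1.0) ** 2


def gradient_descent(u: float, v: float, step: float = 0.1, iters: int = 500):
    """Plain gradient descent with a fixed step size (an illustrative choice)."""
    for _ in range(iters):
        r = u * v - 1.0  # residual of the fit
        # Partial derivatives of the loss, both evaluated at the current point:
        # d/du = r * v and d/dv = r * u.
        gu, gv = r * v, r * u
        u, v = u - step * gu, v - step * gv
    return u, v


if __name__ == "__main__":
    # Non-convexity: the midpoint (0, 0) of two minimisers has a higher loss
    # than the average of their losses.
    print(loss(1.0, 1.0), loss(-1.0, -1.0), loss(0.0, 0.0))  # 0.0 0.0 0.5
    u, v = gradient_descent(0.5, 0.3)
    print(round(u * v, 6), loss(u, v) < 1e-10)  # 1.0 True
```

Started exactly at the origin, gradient descent would not move at all, because the gradient vanishes there; such saddle points are one reason why analysing these simple methods is subtle.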

Workpackage 2 - New optimisation methods for machine learning

In this workpackage we will develop new optimisation methods that yield more accurate machine learning models more efficiently. The main objectives in this workpackage are to investigate and exploit structural properties of the data, which can be geometric, algebraic or combinatorial, in the design of dedicated solution approaches. In particular, we will investigate the use of polynomial functions in machine learning. The resulting training problem is a so-called polynomial optimisation problem, a class of problems that has been studied extensively in the optimisation field in recent years. Moreover, we will develop better optimisation methods for training classification trees.
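For reference, a polynomial optimisation problem in its standard form minimises a polynomial p over the variables z subject to polynomial inequality constraints g_j. As an illustration only (the model class is our assumption, not necessarily the one this workpackage will use), a network with K quadratic activations, f(x) = sum_k a_k (w_k^T x)^2, trained with the squared loss on N data points (x_i, y_i), gives an objective that is a polynomial of degree 6 in the parameters theta = (a, w):

```latex
\min_{z \in \mathbb{R}^n} \; p(z)
\quad \text{s.t.} \quad g_j(z) \ge 0, \qquad j = 1, \dots, m,

\text{e.g.} \quad
\min_{\theta = (a, w)} \; \sum_{i=1}^{N} \Big( \sum_{k=1}^{K} a_k \big( w_k^\top x_i \big)^2 - y_i \Big)^2 .
```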

-

Optimisation with machine learning

Workpackage 3 - Data-centric algorithm design

When we are faced with an optimisation problem, there are two main alternatives for finding solutions. We can either develop an exact optimisation algorithm that finds a provably optimal solution, or settle for a high-quality solution that is obtained quickly by an approximation algorithm. For exact algorithms, we will investigate how machine learning can be used to guide the search towards an optimal solution. For approximation algorithms, the challenge is to derive algorithms that are guaranteed to perform well not only in the worst case, which is the focus of most current analyses, but also, and more interestingly, on the data that actually occur in practice. We will develop new theoretical concepts for a beyond-worst-case analysis that incorporates data. In addition, we will develop algorithms with guaranteed performance according to this theory.
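To make the contrast between worst-case and data-dependent guarantees concrete, the following shows one existing way to formalise it for a minimisation problem, namely an average-case ratio over a distribution of instances. It is given only for comparison and is not the new theory this workpackage aims to develop. Here ALG(I) and OPT(I) denote the objective values of the approximation algorithm and of an optimal solution on instance I (assumed positive), and D is an assumed probability distribution over instances:

```latex
\rho_{\mathrm{wc}} = \sup_{I} \frac{\mathrm{ALG}(I)}{\mathrm{OPT}(I)},
\qquad
\rho_{\mathcal{D}} = \mathbb{E}_{I \sim \mathcal{D}} \left[ \frac{\mathrm{ALG}(I)}{\mathrm{OPT}(I)} \right] \le \rho_{\mathrm{wc}} .
```

The inequality holds because an expectation never exceeds the supremum, so when hard instances are rare in the data, a data-dependent guarantee can be much stronger than the worst-case one.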

Workpackage 4 - Data-centric modelling

Traditionally, an optimisation model is built manually, but this is not always possible, as some restrictions do not translate easily into mathematical functions. We will investigate how to use machine learning to generate (part of) an optimisation model for a given problem from the available data. This results in optimisation models that contain, for example, a deep learning model or a random forest: the trained machine learning model is added as a constraint to the manually developed model. In this workpackage we will analyse which machine learning techniques are best suited for this, and how to solve optimisation models that contain the non-linear functions introduced by the machine learning components.
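As an illustration of how a trained model can enter an optimisation model as constraints, one standard mixed-integer formulation of a single ReLU neuron y = max(0, w^T x + b) uses a binary variable z and assumes known finite bounds L < 0 < U on w^T x + b (the bounds and variable names are our assumptions):

```latex
\begin{aligned}
y &\ge w^\top x + b, & y &\ge 0, \\
y &\le w^\top x + b - L\,(1 - z), & y &\le U z, \\
z &\in \{0, 1\}.
\end{aligned}
```

If z = 1, the constraints force y = w^T x + b and w^T x + b >= 0; if z = 0, they force y = 0 and w^T x + b <= 0. Applying this to every neuron of a trained ReLU network encodes the network with linear constraints and binary variables, so a linear optimisation model containing it becomes a mixed-integer linear program. Other model types, such as random forests, require different encodings.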